[Part of a series: The Age of Steam]

The steam engine might have amounted to relatively little if not for its two compatriots, coal and iron. Together they formed a kind of triumvirate, ruling over an industrial empire. Or perhaps an ecological metaphor is more appropriate – a symbiosis among three species, each nourishing one another and forming the core of a new, mechanical ecosystem, clanking and churning with life. Or perhaps they were in fact three substances of one single organism – iron the bone and sinew, coal the lungs and heart, and steam the pneuma, the spirit, the breath of life. Perhaps, on the other hand, I’m getting carried away.

Yet the close ties between these three materials are undeniable. Steam engines first came into wide use for pumping water from coal pits, using the mine’s own product to power them. Later, engines were used to blow fresh air into the shaft and to lift ore to the surface, in mines of all types, including iron mines. Then the coal produced from the mine went on to be used as fuel to smelt iron, with the help of other steam engines to power the bellows. Some of that iron went into the construction of more steam engines. And so round and round the circle went. The pre-Socratic philosopher Anaxagoras argued that the transformation of materials – such as food becoming flesh and blood – is possible because every kind of substance already contains a share of every other substance within itself. Such seemed to be the case with the mutual transmutation of coal, steam, and iron in the early industrial age.

Sea Coal

Despite its sophistication compared to its immediate predecessors, the Newcomen engine was far from efficient. It guzzled fuel in order to reheat its cylinder each cycle until it was warm enough to hold steam, only to cool the cylinder again immediately with the injection of water for the next power stroke. To keep it running required vast quantities of coal.

For most of history, across the world, wood served as the primary fuel source for both domestic and industrial heat, either directly or in a pre-cooked form called charcoal. Vaclav Smil estimates that, globally, 98% of all fuel energy came from plant matter in 1800. By that time, however, Britain was extracting nine-tenths of its fuel energy from coal dug from the ground. This intensive development of coal mining was very particular to the island of Britain, not to Western Europe generally. Britain’s near neighbor and arch-rival France got only one-tenth of its fuel from coal in the Napoleonic time period.[1]

Coal was used as fuel to some extent in Britain in ancient times – archaeologists have found coal and coal ashes in sites from the Roman imperial era. But no clear evidence of the widespread and regular use of coal in Britain arises thereafter until the thirteenth century. At that point, it appears in the written record as sea coal, or carbo maris. Why “sea” coal? At the time, the term coal was used for what we now call charcoal.

Scholars have offered two explanations for why a similar black, lumpy fuel, dug from the ground, was said to come from the sea. There is no doubt that sea coal was often found washed up on the seashore, having eroded from exposed coastal seams. For example, a grant of a new tract of land extended to the monks of Newminster Abbey in Northumberland around 1236 included the right to collect sea weed and sea coal along its shore (the latter likely to fuel their saltworks).[2] However, there is another possible explanation for the name – that it was called sea coal because it arrived into London by sea from the North. Documentary evidence for this sea trade appears at almost exactly the same time – a document dated to 1228 refers to a Sacoles (Sea Coals) Lane in the suburbs of London, also known as Limeburner’s Lane, presumably after the primary customers for the new seaborne fuel.[3] I can find no firm reason to prefer one explanation over the other, so take your pick.[4]

Coal seams rose close to the surface throughout much of the island, especially in a roughly 300-mile belt across its middle from Birmingham north to Edinburgh. Britain’s gluttonous consumption of coal owed itself to the combination of this supply of easily accessible deposits and the demand created by the demographic explosion of London. Its population more than doubled in the sixteenth century and quadrupled in each of the following two centuries, reaching a million people by 1800. Providing the wood to supply all of these new inhabitants with fuel required expanding the timber catchment of London ever farther upstream (transport by water being far cheaper than by land) to more and more distant woodlands, increasing the cost of its delivery. This caused the price of wood fuel in London to triple in real terms between 1550 and 1700.[5] Industrial users of wood could perhaps move their operations elsewhere, but the inhabitants of the city could not do without fuel to heat their homes in the winter months, particularly during the so-called “Little Ice Age” of the sixteenth and seventeenth centuries.

Coal, the condensed remains of ancient ferns, Lepidodendrons and other decayed plant matter of the Carboniferous era, provided more energy per pound – considered both as weight and currency – than wood.[6] But the houses of the medieval period could not be heated with coal – the open hearths of the time filled the house with smoke, and sulfurous coal smoke was unbearably noxious. So, a change in the architecture of English towns – the introduction of chimneys – was necessary to make it possible to substitute cheaper coal for wood.[7] This came about, not coincidentally, in the sixteenth century, just as the population of London was exploding, and the price of wood along with it.

Without the invention of chimneyed homes, London’s demographic explosion could not have happened – the rural poor would have stayed home, rather than flocking to the city to freeze to death, unable to afford to heat their homes. Instead, cheap coal from the North began to supplant ever more dear wood in the hearths of London. By 1700, Britons were digging up nearly 3 million tons of it each year, over thirteen times as much as in 1560, though the population of the island had not even doubled in that time.[8]

This torrent of coal having been unleashed to warm the townspeople of London, enterprising Britons found many other uses for it outside the home. We have already seen the evidence that it was used for boiling salt from the sea and cooking limestone into quicklime as early as the thirteenth century. The makers of brick, tile, and glass also fed it into their kilns. As we shall shortly see, however, some industries – notably the iron industry – could not yet exploit it.

Both the owners of mines and those who descended into them had to brave many dangers to extract this strange mineral. Agricola’s De re metallica listed the many causes that could lead to the closure of a mine – the exhaustion of a vein or seam, flooding, collapse, noxious air, and so forth. He included among these evils, in the same matter-of-fact manner, “fierce and murderous demons, for if they cannot be expelled, no one escapes from them.”[9] The exhalations of such demons were known as “damps”, after the German Dampf, a fume or vapor. Choke damp and white damp (which we know as carbon dioxide and monoxide, respectively) might smother an individual or small group who encountered a pocket of it. But most treacherous of all was fire damp (methane), which, when ignited by a stray spark, could blast an entire mine to flinders.[10]

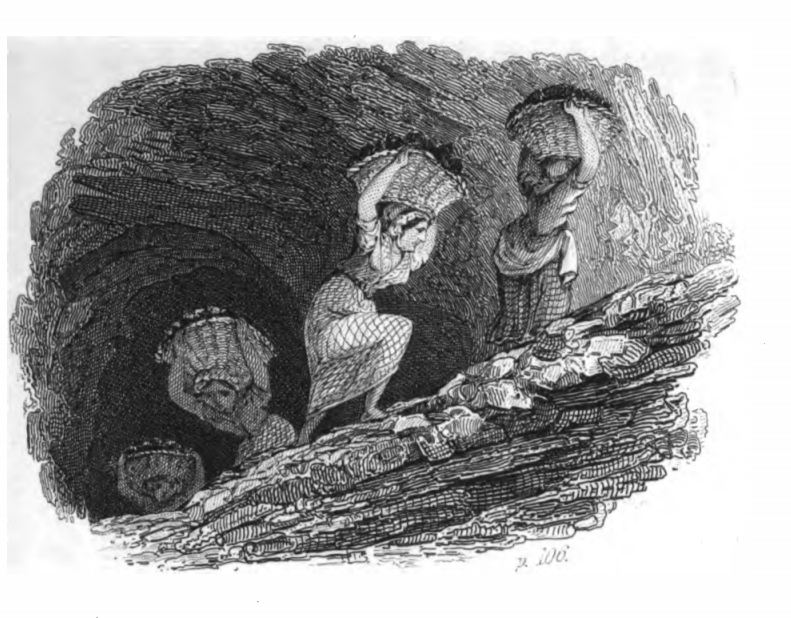

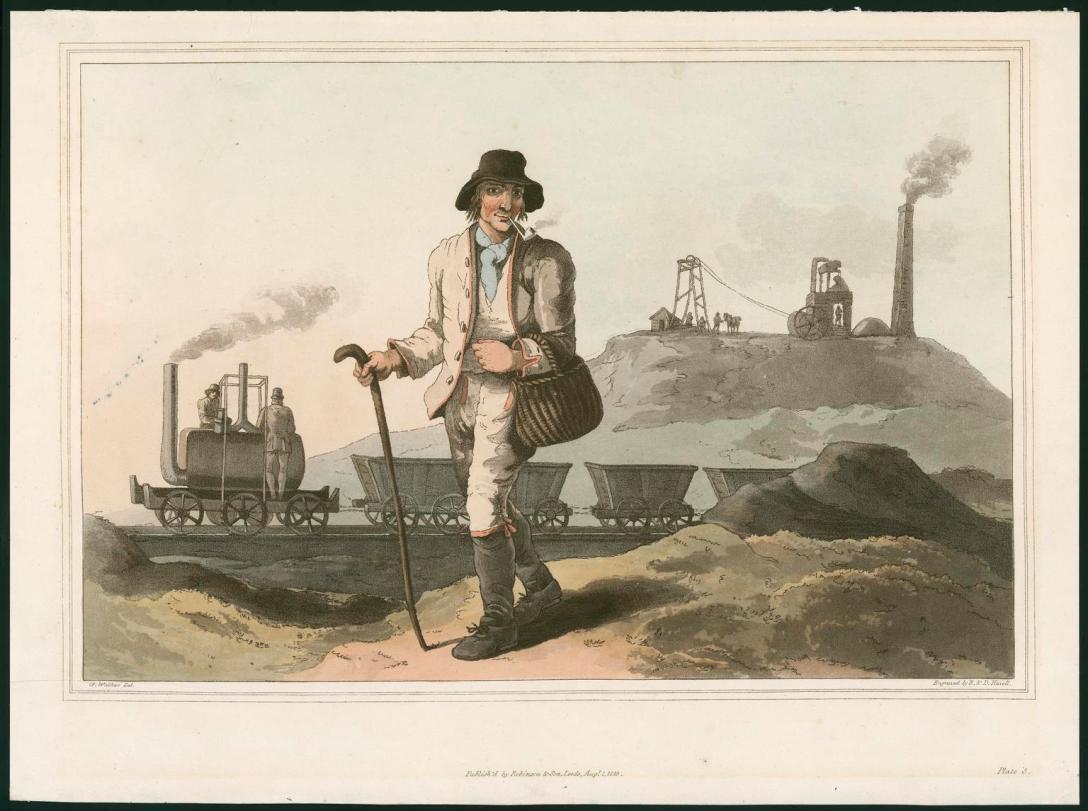

Nonetheless, the mines delved ever deeper in search of more fuel to warm the townsmen of Britain. The maximum pit depth of British coal mines reached fifty meters by the end of the seventeenth century, 100 in the early eighteenth, and 200 by 1765. As we have already seen, this created a demand for more pumping power, which in turn brought into being the first steam engines. We may think of the steam engine as a labor-saving device in retrospect, but in its earliest incarnation it served to create labor instead – drawing up water to expose more of the coal seam to the back-breaking effort required to extract it from the earth. Women and children were employed in this effort along with men. Robert Bald, in his 1808 account of the coal trade in Scotland, described in detail the toil of women employed to repeatedly scale ladders up from the mines, hauling coal in baskets on their backs. Each raised as much as 4000 pounds to the surface in a single work day.[11]

Some historians have claimed that Newcomen’s engine, fuel-hungry as it was, could be used economically only at the pithead – actually pumping water from the same coal mine that served to stoke its boiler. The addition of any transportation costs, according to this argument, would have made it unprofitable.[12] If true, this would provide a tidy explanation for why the engine developed in Britain and nowhere else – no other place was exploiting coal as a fuel source nearly so intensively, and so no other place had access to the cheap fuel that made a first-generation steam engine worthwhile. However, this seems to be something of an exaggeration. Fragmentary evidence exists for Newcomen engines operating in Cornwall to pump water from tin mines throughout the early 1700s. This region was rich with metallic mines but lacked native coal. These operations seem to have become uneconomical and ceased operation only after the Tory government increased the excise on the coastal coal trade – coal reached Cornwall from the North across the Bristol Channel, just as it reached London via the Thames Estuary.[13]

If Newcomen engines could in fact operate at some distance from a coalfield, it still seems probable that easy access to cheap fuel was a prerequisite for the adoption of the steam engine in Britain and its continued success there. At the mine itself, the savings offered by an engine were considerable. According to the canonical history of the British coal industry, it cost £900 a year to raise water at Griff Colliery, near Coventry, using a horse-driven wheel. Then the owner acquired a Newcomen engine, reducing his annual expenses (after the initial capital outlay) to less than £150 a year.[14]

But a second element dug from the bowels of the earth was also crucial to the expansion of steam. Although Newcomen’s first engines used brass cylinders, later builders quickly switched to cheaper iron, and the steam engine became dependent on this fussiest of metals.

The Blast Furnace

Among the relatively small number of worked metals used in the pre-industrial period, gold could often be found in nature in its pure form. Most of the rest (such as copper, lead, tin, and zinc) could be coaxed from the other materials bound with them by heating the ore until they melted – almost 2000 degrees Fahrenheit for copper, far less for the others. The pure metal would then pour out of the furnace in liquid form.

Iron, however, presented a much greater challenge. With a melting point of 2800 degrees Fahrenheit, no fire could be made in antiquity capable of reducing it to liquid form. Eventually, sometime around 1000 BC, craftsmen discovered a two-step process for getting around this obstacle. First, as usual, the ore was cooked in a furnace. This did not melt it, but created a “bloom” – a lumpy, glowing hot coral of iron still mixed with unusable slag. Then, the ironworker beat the bloom repeatedly with a hammer, driving out the slag with a combination of heat and physical force. The result was “wrought” iron – a hard but malleable material suitable for hardware such as nails, locks, and chains, and tools such as sickles and hammers. A further refinement into very hard steel could be made by cooking the iron further in the presence of a carbonaceous material, but this was a fickle business, and steel could be made only in small quantities – enough for the edge of a blade, which would be wrapped around an iron core to make a sword or axe head.

Ordinary lumber would not do for smelting iron. Instead, colliers produced charcoal by piling wood around a pole, covering it in clay, and then burning it. This pre-cooked form of wood, very dry and almost pure carbon, gave off very little smoke or steam that might interfere with the smelt. It also contained half again as much energy as the same weight of wood, and a charcoal fire could reach temperatures of 1600 degrees Fahrenheit or more.[15]

As Europeans began to rely on iron more and more in the late medieval centuries, they sought out new sources of ore, some of which proved even harder to smelt than usual. This led metallurgists to build ever bigger furnaces. At some point in the fifteenth century this change in degree became a change in kind, resulting in the blast furnace, which produced a new form of iron – cast, not wrought. (In fact, this technology had been known to the Chinese for over a thousand years, but it seems to have been discovered independently in the West.)[16]

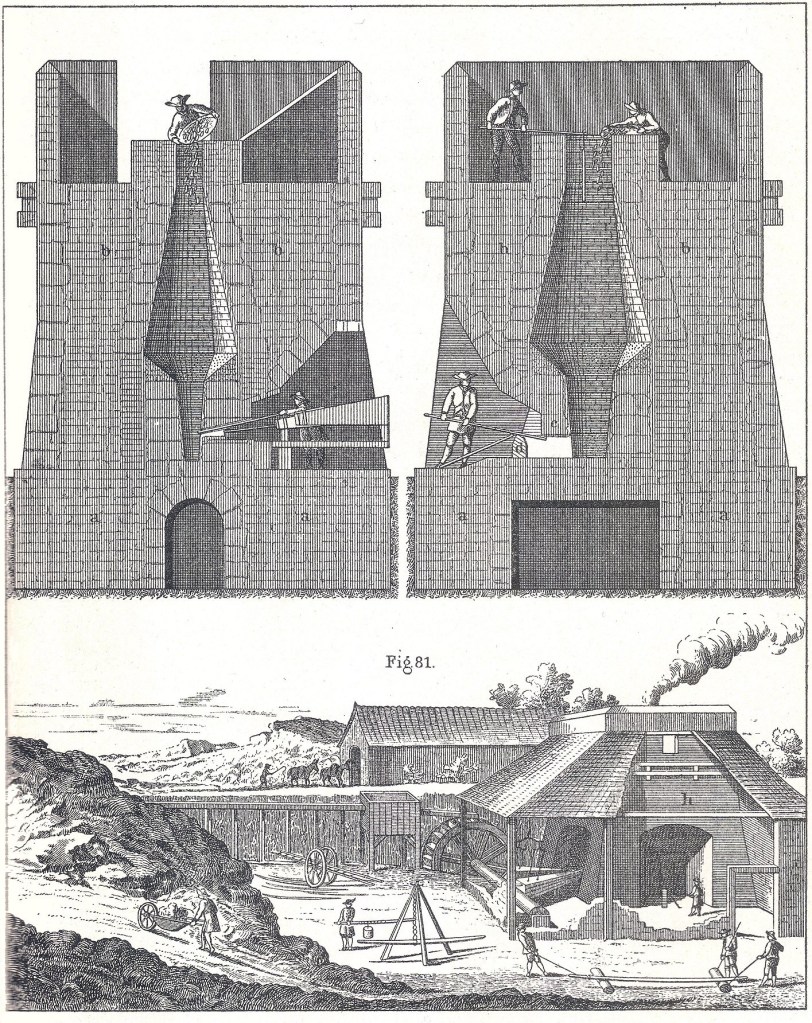

The blast furnace was fed continuously with a mix of ore, charcoal and a material called the “flux” (typically limestone). A bellows drove the temperature well beyond that of a normal charcoal fire, melting the iron completely as it absorbed carbon from the charcoal. The flux absorbed impurities in the ore and floated them to the top of the furnace. The liquified metal flowed to the bottom, where it could be drained out like beer from a keg. Because of its higher carbon content (1.5% or more of the metal’s mass, versus 0.6% or less for wrought iron), cast iron was brittle, and unsuitable for most tools and hardware. However, just like the bloom, once the cast iron solidified into “pigs,” it could be heated and beaten into ingots of wrought iron, known as bars.

The blast furnace allowed iron production on a far larger scale than ever before, and it quickly displaced the bloomery hearth.[17] Its bellows-stoked fires, however, like the Newcomen engine, had an unslakable thirst for fuel. A typical eighteenth-century British blast furnace produced 300 tons of iron in its operating season – from October to May, when flowing water could be counted upon to operate a waterwheel and drive the bellows. To keep it lit continuously during that period required approximately six square miles of woodlands (such an area could grow back enough fresh timber from the coppiced stumps for the furnace, season after season).[18]

The obvious answer to this fuel hunger was to burn “sea” coal at the furnace instead of charcoal – ironically the non-renewable fossil fuel, made from the irreplaceable husks of aeons-dead plant matter, was less dear than its freshly-grown ligneous descendants. But it would not do. The impurities in the coal, such as sulfur and phosphorus, would ruin the iron. A way around this problem was discovered in the village of Coalbrookdale, along the River Severn in West-Central England. In fact, the developments at Coalbrookdale in the first half of the eighteenth century provide an illustration in miniature of the intertwined three-way dependency among coal, iron, and steam.

Coalbrookdale

In 1708, Abraham Darby secured a lease for a derelict blast furnace at Coalbrookdale. Darby belonged to the group of dissenters from the Church of England known colloquially as the Quakers, for they were expected to tremble before God. He had made his early fortune in Bristol, a major center of the Quaker community, as a partner in a brass works with several of his co-religionists.[19]

When Darby re-ignited the old furnace, however, he used coke to fuel it, not charcoal. Coke bears the same relation to coal as charcoal does to wood (and, luckily for thirsty consumers, no relation to Coca-Cola) – both being a high-carbon residue created by cooking the original fuel in a low-oxygen environment. Coke carried fewer impurities than coal, and thus reduced the problem of contamination of the ore when used as a furnace fuel. Moreover, as a byproduct of the abundant output of the nearby Shropshire coal fields, it was substantially cheaper than charcoal. Eventually, other ironmakers imitated Darby’s innovation, which became widespread throughout Britain in the 1750s, making coke the dominant fuel for the smelting of British iron.

The bare facts of this timeline of the adoption of coke in iron smelting are clear enough, but two rather mysterious half-century gaps require explanation.[20] First is the gap from the first industrial application of coke to Darby’s use of it at Coalbrookdale. Raw coal could not be used for drying malt for the same reasons that prevented its use in iron-making: the chemical impurities would sour the malt. Malt millers used coke to solve this problem as early as the 1640s. So why did it take so long for this idea to jump to the iron industry?

In fact, there were several prior attempts to use coal in blast furnaces. Three explanations can be offered for why Darby produced the first known success. First, Darby himself had a background in constructing malt mills as an apprentice, before he had joined the brass works. Therefore, he was already directly familiar with the use of coke as a fuel, an unlikely circumstance for an ironmaker. Second, Darby was lucky in his neighborhood coal, which was relatively low in sulfur. Coking did not necessarily entirely eliminate such chemicals from the raw coal, so prior attempts at smelting iron using coke from inferior coal may have failed, discouraging other would-be Darbys.[21]

But the most important difference between Darby and other furnace operators was that Darby intended to use cast iron as an end in itself. One of his employees at the brass works, John Thomas, had developed a method for casting iron pots in sand molds, which Darby patented in 1707.[22] It was to exploit this patent that Darby secured a lease for the decrepit blast furnace at Coalbrookdale the following year.[23] The coke-made iron he produced was not desirable for use in finery forges for making wrought iron because of its higher silicon content, but proved perfectly suited to the cast iron products he intended to make – the silicon in fact made the iron flow more easily into the mold.[24]

Darby had meanwhile helped to establish the Quaker religious community in the area, where he became a prosperous local leader, renting a Tudor manor and starting construction on a new home for himself, where at least one Quaker meeting was held. Darby, however, was too ill to attend, and died shortly thereafter, in May 1717. The ironworks continued under Darby’s partners (his son, Abraham Darby II, being too young to inherit), and began making parts for steam engines to drain the pits of a coalmaster in the Warwickshire coal fields, about fifty miles to the east. By 1722, the Coalbrookdale works were casting whole engine cylinders, and sold ten such by the end of the decade, by which time the younger Darby had begun to take an active role. He installed his own Newcomen engine in the early 1740s, to replace a horse pump, bringing water upstream and thus allowing the furnace to run all summer, when the water flow of the local stream was unreliable. The circle of coal, iron, and steam continued to tighten.[25]

But now we find ourselves in the second gap in the coke timeline – the four decades between Darby’s innovation and the widespread adoption of coke furnaces. What happened in this time period to make coke-based smelting generally viable for making all forms of iron, not only cast iron? No evidence for any key innovation in this period can be found. The explanation for the change is instead social and political. First, there was long-standing suspicion in the industry towards the idea of coal as a fuel – many had tried it before Darby, and it had always failed. Second, there was a general depression in British iron prices in this period due to a wave of Swedish and Russian imports of bar iron. The incentives to invest in risky innovations under such circumstances were meager. This changed in 1747 when the Swedish state restricted iron output. Suddenly prices in Britain began to rise, new investments were made, and new ironworks built. These new furnaces (including Abraham II’s own new construction at Horsehay in 1754), adopted coke, and by the 1790s, only 10% of British iron was smelted with charcoal.[26]

Iron Unleashed

The substitution of coke for charcoal unleashed cheap coal on the iron production process. It also had a direct effect on blast furnace design. The brittleness of charcoal had prevented furnaces from growing too large, lest the fuel be crushed to ash under its own weight. But coke was much more resistant to compression. The scale of cast iron manufacture was thus freed from two constraints simultaneously – the price of charcoal and the necessity to be sited near a coppiced woodland for fuel, and the limitation on the scale of production imposed by the need to keep that fuel from collapsing.

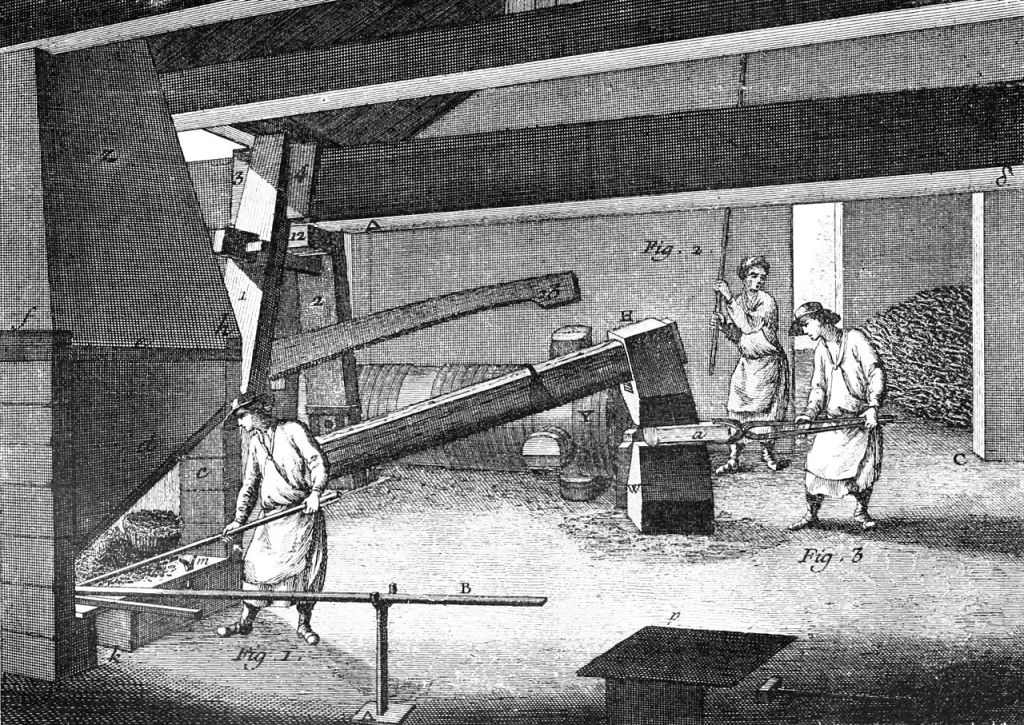

However, one last downstream constraint remained – the finery forge that beat cast iron into wrought bar iron still required charcoal and quite a lot of labor. The puddling process, developed in Britain in the 1780s, removed this last bottleneck. A puddling furnace turned cast iron into wrought simply by applying heat and stirring. This allowed the mass production of wrought iron, using coal as fuel (since the iron no longer needed to be directly exposed to the heat source). This, in turn, helped to finally resolve the metallurgical problems that had made high-pressure steam, of the sort that had burst Savery’s steam pumps, impossible to deal with.

But this is to get ahead of the story. We must now return to the history of the steam engine proper, rather than of its materials and fuel sources. We continue with the tale of the most famous and pivotal figure in that history – James Watt.

[1] Vaclav Smil, Energy and Civilization: A History (Cambridge, MA: MIT Press, 2017), 233, 268.

[2] Galloway, Annals of Coal Mining and the Coal Trade, vol. 1 (London: The Colliery Guardian Company, 1898), 21, 64.

[3] Galloway, 29-30.

[4] If I had to choose, I would lean towards the “washed up on the sea” explanation. It seems more likely that London would quickly adopt the term used in the north for their new fuel than that northern writers would be using the London term as early as 1236, when the trade was relatively young. But it’s possible that the sea coal trade was significantly older than we know.

[5] Robert C. Allen, The British Industrial Revolution in Global Perspective (Cambridge: Cambridge University Press, 2009), 84-86.

[6] Barbara Freese, Coal: A Human History (New York: Perseus Publishing, 2003), 18-20.

[7] William Harrison’s Description of England of 1577 accused the chimney of bringing about a decline in health in the younger generation, who suffered from far more “rheums, catarrhs, and poses” than their elders. In his view, just as smoke hardened timber, so too the smoke that filled earlier homes had hardened the constitutions of the inhabitants. Galloway, 81.

[8] Allen, 82, 86-87.

[9] Agricola, De Re Metallica, Herbert Clark Hoover and Lou Henry Hoover, trans. (New York: Dover Publications, 1950), 217-218.

[10] Freese, 47-50.

[11] Robert Bald, A General View of the Coal Trade of Scotland (Edinburgh: A. Neill and Company, 1808), 130-34. Vaclav Smil notes that “every transition to a new form of energy supply has to be powered by the intensive deployment of existing energies and prime movers: the transition from wood to coal had to be energized by human muscles, coal combustion powered the development of oil, and… today’s solar photovoltaic cells and wind turbines are embodiments of fossil energies…,” quoted in Smil, 230.

[12] Such is the position of Allen, 162-63.

[13] James Greener, “Thomas Newcomen and his Great Work,” The Journal of the Trevithick Society (August 2015), 85-90.

[14] Galloway, 241.

[15] Smil, 165-66.

[16] Friedel, A Culture of Improvement (Cambridge, MA: MIT Press, 2010), 79-84.

[17] Smil, 211-212.

[18] Smil, 214-215.

[19] Barrie Trinder, The Darbys of Coalbrookdale (London: Phillimore, 1981), 13-14.

[20] There is another pretender to the honor of being first to smelt iron using coal – Dud Dudley claimed to have done so as early as 1620. However, his claim is less well-attested, and makes no mention of the crucial ingredient of coke. There is a faint possibility that Dudley handed down some secrets to Darby via a family connection. See Carl Higgs, “Dud Dudley and Abraham Darby; Forging New Links” (2005), https://web.archive.org/web/20090219084159/http://www.blackcountrysociety.co.uk/articles/duddudley.htm.

[21] Trinder, 20.

[22] Trinder, 14.

[23] Trinder, 21-22.

[24] Trinder, 22.

[25] Trinder, 23, 25.

[26] Peter King, “The choice of fuel in the eighteenth-century iron industry: the Coalbrookdale accounts reconsidered,” The Economic History Review 64, 1 (February 2011), 132-156; Friedel, 188.
