On May 9, 1961, Newton Minow, newly appointed chairman of the FCC, gave the first speech of his tenure. He spoke before the National Association of Broadcasters, a trade group founded in the 1920s to further the interests of commercial radio, an organization dominated in Minow’s time by the big three of ABC, CBS, and NBC. Minow knew broadcasters were apprehensive about what changes the new administration might bring, after the activist rhetoric of JFK’s “New Frontier” presidential campaign; and indeed, after a few words of praise, he proceeded to indict the medium which his audience had created. “When television is good,” Minow said,

nothing — not the theater, not the magazines or newspapers — nothing is better. But when television is bad, nothing is worse. I invite each of you to sit down in front of your television set when your station goes on the air and stay there, for a day, without a book, without a magazine, without a newspaper, without a profit and loss sheet or a rating book to distract you. Keep your eyes glued to that set until the station signs off. I can assure you that what you will observe is a vast wasteland.

Instead of a cavalcade of “mayhem, violence, sadism, murder,” and endless commercials “screaming, cajoling, and offending” the audience, Minow advocated programming that would “enlarge the horizons of the viewer, provide him with wholesome entertainment, afford helpful stimulation, and remind him of the responsibilities which the citizen has toward his society.”

Minow did follow up on his rhetoric, but not by cracking down on existing programming. He instead threw his support behind efforts to open new avenues that would allow less commercially driven voices to reach television audiences. The 1962 All-Channel Receiver Act, for example, which required TV manufacturers to include ultra-high frequency (UHF) receivers in their sets, opened dozens of additional channels for television broadcasting.

But another medium for distributing television, with a potential capacity well beyond that of even UHF, was already unspooling, mile by mile, across the American landscape.

Community Television

Cable television began as community antenna television (CATV), a means of bringing broadcast television to those not reached by the major network stations, often towns nestled in mountainous terrain. In the late 1940s, entrepreneurs began setting up their own antennas on high ground to capture broadcast signals, then amplified them and retransmitted them through a shielded cable to paying customers below.

Cable operators soon found other ways to bring more television programming to audiences, such as importing stations from a city with broad programming options to one with few channels (from Los Angeles to San Diego, for example). Cable providers began to build out their own microwave networks to allow this kind of entertainment arbitrage, transcending their former confinement to single local markets. By 1971, about 19 million American viewers were served by cable television (out of a total population of a bit more than 200 million).1

But cable had the potential to do far more with its tethered customers than simply extend the reach of the “wasteland” emanating from the over-the-air broadcasters. Because the signals inside the cable were isolated from the outside world, cable was not bound by FCC spectrum allocations: it had, for all practical purposes at the time, unlimited bandwidth. In the 1960s, some cable systems had begun to exploit this capacity to offer programming centered around local events, often created by local high school or university students. The CA of community antenna, which brought television to local consumers, expanded into community access, which brought local producers to television.

At the forefront of proclaiming the potential of cable was a superficially unlikely proponent of high technology, Ralph Lee Smith. Smith was a bohemian writer and folk musician in Manhattan’s Greenwich Village, whose website today focuses mainly on his expertise with the Appalachian dulcimer. Yet his first book, The Health Hucksters, published in 1960, revealed him to be a classic progressive, like Minow, in favor of firm government action to support the public interest. In 1970 he published an article entitled “The Wired Nation”, which he expanded into a book two years later.

In his book, Smith laid out a sketch of a future “electronic highway,” based on a report by the Electronic Industries Association (EIA), whose members included the major computer manufacturers. This highway system, as the EIA and Smith imagined it, would be a national network of coaxial cable wiring up every television in the nation’s homes and offices. At the hubs of this vast network would be computers, and users at their home terminals would be able to send signals upstream to those computers to control what was delivered to their televisions: everything from personal messages, to shopping catalogs, to books from a remote library.

Wired Cities

In the early 1970s, MITRE Corporation headed a research project that attempted to bring this vision to life. MITRE, a non-profit spun out of MIT’s Lincoln Lab to help manage the development of the SAGE air defense system, had built up staffing in the Washington, D.C. area over the course of the previous decade, settling into a campus in McLean, Virginia. They came to the “wired nation” by way of another visionary concept of the 1960s, computer-aided instruction (CAI). CAI seemed feasible due to the emergence of time-sharing systems, which would allow each student to work at their own terminal, with dozens or hundreds of such devices connected to a single central computer center. Many academics in the 1960s believed (or at least hoped) that CAI would transform the educational landscape, making possible customized, per-student curricula, and bringing top-quality instruction to inner city and rural kids. The problem of the inner cities had become especially pressing at a time when riots raged in American cities nearly every summer, whether in Watts, Detroit, or Newark.

Among the many researchers experimenting with CAI was Charles Victor Bunderson, a psychology professor who had built a CAI lab at the University of Texas in Austin. Bunderson was working on a computer-based curriculum for remedial math and English for junior college students under an NSF grant, but the project proved more than he could handle. So he enlisted the help of David Merrill, an education professor at Brigham Young University in Utah. Merrill had done his PhD at the University of Illinois, under Larry Stolurow, creator of SOCRATES, a computer-based teaching machine. Nearby on the Urbana campus he had encountered PLATO, another early CAI project.

Together Bunderson and Merrill pitched both NSF and MITRE on a larger grant that would fund MITRE, Texas, and BYU together. MITRE would lead the project and apply its system-building expertise to the underlying hardware and software of the time-sharing apparatus. Bunderson’s Austin lab would provide the overarching educational strategy (based on learner control – direction of the pace of learning by the student) and the course software. BYU would implement the instructional content. NSF bought the concept, and provided the princely sum of five million dollars to get TICCET – Time-Shared Interactive Computer-Controlled Educational Television – off the ground.

MITRE’s design consisted of two Data General Nova minicomputers, supporting 128 Sony color televisions for the terminal output. The full student carrel also contained headphones and a keyboard, though a touch-tone telephone could also serve as the input device. Using inexpensive minicomputers for processing and terminal equipment that was already widely available in homes would bring the overall cost of the system down and make it more feasible to deploy in schools. Under MITRE’s influence, CAI was infused with the spirit of the “wired nation.” TICCET became TICCIT, with “educational” becoming “information”. MITRE imagined a system that could deliver not just education, but social services and information of all kinds to the underserved urban core. They hired Ralph Lee Smith as a consultant, and made plans for a microwave link to connect TICCIT to the local cable system in the nearby planned community of Reston, Virginia. The demonstration system went live on the Reston Transmission Company’s cable links in July 1971.

MITRE had sweeping plans in place to extend this concept to a Washington Cable System split into nine sectors across the District of Columbia, to be launched in time for the 1976 U.S. bicentennial. But in the event, the Reston system failed to live up to expectations. For all the rhetoric of bringing on-demand education and social services to the masses, the Reston TICCIT system offered nothing more than the ability to call up pre-set screens of information on the television (e.g., a bus schedule, or local sports scores) by dialing into the MITRE Data General computers. It was a glorified time-and-temperature line. By 1973 the Reston system had gone out of operation, and the Washington, D.C. cable system was never to be. One major obstacle to expansion was the cost of the local memory needed to continually refresh the screen image with the data dispatched from the central computer. MITRE transferred the TICCIT technology to Hazeltine Corporation for commercial development in 1976, and it lived on there for another decade as instructional software.

Videotex

The first major American experiment in two-way television is hard to characterize as anything but a failure. But in the same period, the idea that the television was the ideal delivery mechanism for the new computerized services of the information age sank firmer roots in Europe. This second wave of two-way television, driven mainly by telecommunications giants, generally abandoned the upstart technology of cable in favor of the well-established telephone line as the means of communication between television and computer. Though cable had a massive advantage in bandwidth, the telephone held the trump card of incumbency: relatively few people had cable access, especially outside the U.S.

It began with Sam Fedida, an Egyptian-born engineer, who joined the British Post Office (BPO) in 1970. At the time, the Post Office was also the telecommunications monopoly, and Fedida was assigned to design a “viewphone” system, comparable to the Picturephone service that AT&T had just launched in Pittsburgh and Chicago. However, the Picturephone concept faced an enormous technical hurdle: a continuous video stream gulped down huge quantities of bandwidth, which was prohibitively expensive in the days before fiber optic cables. To transmit just 250 lines of vertical resolution (half that of a standard television of the time), AT&T had to levy a $150 a month base rate for thirty minutes of service, plus twenty-five cents per additional minute.
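To make the Picturephone rate concrete, here is a small Python calculation of a monthly bill under the terms quoted above: a $150 base covering thirty minutes, plus twenty-five cents per additional minute. It is only a sketch. It assumes usage is billed in whole minutes and ignores equipment, installation, tax, and long-distance charges, none of which the text specifies; the function name is hypothetical.

```python
def picturephone_monthly_bill(minutes_used: int) -> float:
    """Monthly charge in dollars: a $150 base covering 30 minutes,
    plus $0.25 for each additional whole minute (assumed billing unit)."""
    base_cents = 150_00          # base rate, kept in cents to avoid float rounding
    included_minutes = 30        # minutes covered by the base rate
    extra_cents_per_minute = 25  # charge for each minute beyond the included 30
    extra_minutes = max(0, minutes_used - included_minutes)
    return (base_cents + extra_minutes * extra_cents_per_minute) / 100


for minutes in (10, 30, 60, 120):
    print(f"{minutes:>3} min -> ${picturephone_monthly_bill(minutes):.2f}")
```

Even two hours of calls a month would add only 15 percent to the bill under these assumptions; the fixed base charge, not per-minute usage, dominated the cost.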

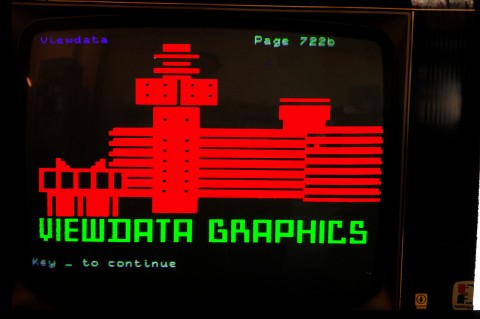

Fedida, therefore, came up with an alternative idea that would be both more flexible and less costly – not viewphone, but Viewdata.2 The Post Office could connect users to computers at its switching centers, offering information services by sending screens of data down to home television sets and receiving input back through the customer’s phone line using standard touch-tone signals. Static screens of text and simple images, which might be refreshed every few seconds at most, would use far less bandwidth than video. And the system would make use of existing hardware that most people already had in their homes, rather than the custom screens required for Picturephone.

Fedida and his successor as lead of the project, Alex Reid, convinced the BPO that Viewdata would bring new traffic, and thus more revenue, to the existing, fixed-cost telecommunications infrastructure, especially in off-peak evening hours. Thus began a trajectory away from philanthropic ideas about how interactive television could be made to benefit society, towards commercial calculations about how online services could bring in new revenue.

After several years of development, the BPO opened Viewdata to users in selected cities in 1979, under the brand name “Prestel,” a portmanteau of press (as in publishing) and telephone. GEC 4000 minicomputers in local telephone offices responded to users’ requests over the telephone line to fetch any of over 100,000 different ‘pages’: screens of information stored in a database. The data came from government organizations, newspapers, magazines, and other businesses, and covered subjects from news and weather to accounting and yoga. Each local database received regular updates from a central computer in London.

The terminals rendered each page in 24 lines of 40 characters, in full color, with graphics composed from characters that contained simple geometric shapes. The screens were organized in a tree-like structure for user navigation, but users could also call up a particular page directly by entering its unique numeric code. Users could send information as well as receive it, for example to submit a request for a seat reservation on an airplane. In the mid-1980s, Prestel Mailbox launched to the public, allowing users to message one another directly.
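The two ways of reaching a Prestel page described above, walking down the menu tree or keying a page's number directly, can be sketched as a small data structure. This is a hypothetical Python model, not Prestel's actual software: the class names, the sample pages, and the convention that a child page's number extends its parent's number by one digit are all assumptions made for illustration. Only the 24-by-40 page size comes from the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

ROWS, COLS = 24, 40  # page size described in the text


@dataclass
class Page:
    number: str        # unique numeric code, e.g. "12"
    lines: List[str]   # text content of the page
    # Menu links: the key a user presses -> number of the page it leads to.
    children: Dict[str, str] = field(default_factory=dict)

    def render(self) -> List[str]:
        """Clip or pad the content to exactly ROWS lines of COLS characters."""
        body = [line[:COLS].ljust(COLS) for line in self.lines[:ROWS]]
        return body + [" " * COLS] * (ROWS - len(body))


class PageDatabase:
    """A hypothetical page store: one index by number, plus menu links."""

    def __init__(self) -> None:
        self.pages: Dict[str, Page] = {}

    def add(self, page: Page, parent: Optional[str] = None) -> None:
        self.pages[page.number] = page
        if parent is not None:
            # Assumed convention: the child's number is the parent's plus one
            # digit, and that final digit is the menu key that leads to it.
            key = page.number[len(parent):]
            self.pages[parent].children[key] = page.number

    def follow(self, current: str, key: str) -> Page:
        """Tree navigation: choose a numbered option on the current page."""
        return self.pages[self.pages[current].children[key]]

    def goto(self, number: str) -> Page:
        """Direct access: jump straight to a page by its numeric code."""
        return self.pages[number]


db = PageDatabase()
db.add(Page("1", ["Main index", "1 News", "2 Travel"]))
db.add(Page("11", ["News headlines"]), parent="1")
db.add(Page("12", ["Travel", "1 Flight reservations"]), parent="1")
db.add(Page("121", ["Flight reservations"]), parent="12")

# Two routes to the same page: walking the menus, or keying its number.
via_menus = db.follow(db.follow("1", "2").number, "1")
assert via_menus is db.goto("121")
print("\n".join(db.goto("1").render()[:3]))
```

Keeping a single index keyed by page number is what makes the direct jump cheap in this sketch: the tree is just a set of menu links layered over that index.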

Contrary to the engineers’ initial intent, at launch Prestel required a custom television set with a built-in modem and other electronic hardware, likely due to resistance from television manufacturers, who demanded a cut of the action in return for the BPO’s incursion into their territory. As one might guess, requiring the purchase of a new, very expensive television (£650 or more) to get started on the service was a huge impediment to gaining subscribers, and the strategy was soon abandoned in favor of cheaper set-top boxes. Nonetheless, the cost of using the system kept most potential users away: £5 a quarter for a subscription, plus the cost of the telephone call, plus a per-minute system fee during daytime hours, plus a further per-minute charge for some premium services. The system had only 60,000 subscribers by the mid-1980s, and was most popular in the travel and financial services industries, rather than for recreational use. It survived into the mid-1990s, but never broke the 100,000 subscriber mark.

Despite its struggles, however, Prestel had many competitors and imitators, with others launching similar services based on screens of text and simple graphics delivered to home televisions over telephone lines. The category was known as “videotex,” and systems of that type included Canada’s Telidon, West Germany’s Bildschirmtext, and Australia’s Prestel-based Viatel. Like the BPO’s system, almost all were launched by their country’s state-controlled telecommunications authority. Although the United States had no such authority, videotex found its way there too, where it eventually spawned a major new competitor in the information services market.

Videotex Reimagined

The story of Prodigy begins in Canada. The Communications Research Centre (CRC), a government lab in Ottawa, had been working on encoding simple graphics into a stream of text throughout the 1970s, independently of the BPO’s Viewdata work. They developed a system that allowed designers to add arbitrary colored polygons to their screens, using special character codes to specify position, direction, color, and so forth. Other special characters switched the system between text and graphical modes. This allowed for richer and more intuitive graphics than the Prestel system, which built images from small, simple shapes that could not break the grid of characters.

AT&T, impressed with the flexibility of the Canadian system and freed by the FCC to compete in limited ways in the digital services market by the 1980 “Computer II” ruling, decided to try to bring videotex two-way television services to the American market. The joint CRC and AT&T standard was called NAPLPS (North American Presentation Level Protocol Syntax).

AT&T developed a terminal called Sceptre, with a modem and hardware for decoding NAPLPS, and launched videotex experiments in several regions of the country in the early 1980s, each with a different partner: Viewtron with the Knight-Ridder newspaper conglomerate in Florida, Gateway with Times-Mirror (another newspaper conglomerate) in California, and VentureOne with CBS in New Jersey. The Viewtron and Gateway projects both lost money and closed down in 1986. But VentureOne, although CBS and AT&T closed it down in 1983 after less than a year of operation, laid the groundwork for a longer-lasting achievement.

Due to a new court ruling which came down as part of the 1984 breakup of Ma Bell, AT&T (now a long-distance-only enterprise divested of its local operating companies) was once again forbidden from the computer services market. CBS therefore relaunched its videotex efforts with two new partners, IBM and Sears, in 1984. They called the company Trintex, invoking the trinity of companies involved in this new videotex project. IBM, still the dominant computer manufacturer in the world by some margin, brought obvious value to the partnership. Sears would bring its retailing know-how online, and CBS its media expertise and content.3 If Viewdata began videotex’s trajectory towards commercialization, Trintex completed it.

Trintex hired David Waks, a systems engineer who had been working with computers since his undergraduate days at Cornell in the late 1950s, to architect their videotex system. Waks argued that the system shouldn’t really be videotex at all, or rather that Trintex should abandon the coupling between videotex and home television. The NAPLPS protocol was a fine enough way to efficiently deliver high-resolution graphics over low-bandwidth connections; the Sceptre terminal’s modem supported only 1,200 bits per second, which was pretty good for the time. Waks even improved upon it, coming up with a system for partial refreshes, so that one area of the screen could be changed without re-delivering the whole screen’s content. However, the assumption that a set-top box connected to a television was the lowest-friction way to deliver online services no longer made sense, given that millions of Americans now had machines perfectly capable of decoding and displaying NAPLPS content: home computers. Trintex, Waks argued, should follow the same model as GEnie and CompuServe, using microcomputer software for the client terminal rather than a dedicated hardware box. It’s likely that IBM, maker of the IBM PC, threw its support behind the idea as well.
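The payoff of Waks's partial refreshes is easiest to see as arithmetic on the 1,200 bits-per-second link. The Python sketch below is a simplification under loudly stated assumptions: it models the screen as a 24-by-40 character grid (borrowing Prestel's layout, although NAPLPS pages were built from graphics commands), assumes 10 bits on the wire per byte (8 data bits plus start and stop bits), and invents a 3-byte header for each update. It diffs two screens, sends only the changed runs, and compares transmission times. It illustrates the idea, not Trintex's actual protocol.

```python
from typing import List, Tuple

BITS_PER_SECOND = 1200  # modem speed cited in the text
BITS_PER_BYTE = 10      # assumption: 8 data bits plus start and stop bits
HEADER_BYTES = 3        # assumption: hypothetical (row, column, length) header per update

Update = Tuple[int, int, str]  # (row, starting column, replacement text)


def diff_screens(old: List[str], new: List[str]) -> List[Update]:
    """Find runs of changed characters, row by row (rows assumed equal length)."""
    updates: List[Update] = []
    for row, (before, after) in enumerate(zip(old, new)):
        col = 0
        while col < len(after):
            if before[col] == after[col]:
                col += 1
                continue
            start = col
            while col < len(after) and before[col] != after[col]:
                col += 1
            updates.append((row, start, after[start:col]))
    return updates


def full_refresh_bytes(screen: List[str]) -> int:
    """Re-delivering the whole screen sends every character."""
    return sum(len(line) for line in screen)


def partial_refresh_bytes(updates: List[Update]) -> int:
    """A partial refresh sends only the changed runs, each with its header."""
    return sum(HEADER_BYTES + len(text) for _, _, text in updates)


def transmit_seconds(num_bytes: int) -> float:
    return num_bytes * BITS_PER_BYTE / BITS_PER_SECOND


old = [" " * 40 for _ in range(24)]
old[5] = "Flight 101  ON TIME".ljust(40)
new = list(old)
new[5] = "Flight 101  DELAYED".ljust(40)

updates = diff_screens(old, new)
full = transmit_seconds(full_refresh_bytes(new))
partial = transmit_seconds(partial_refresh_bytes(updates))
print(updates)
print(f"full refresh: {full:.1f} s, partial refresh: {partial:.2f} s")
```

Under these assumptions, repainting the whole screen takes 8 seconds, while updating one seven-character status field takes under a tenth of a second.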

By the time the system launched in 1987, one of the three partners, CBS, had dropped out due to financial difficulties. Trintex did not have a pleasant ring to it anyway, so the company rebranded itself as “Prodigy.” Local calls to a regional computer connected users to a data network based on IBM’s Systems Network Architecture (SNA), which routed them to Prodigy’s data center near IBM’s headquarters in White Plains, New York. A clever caching system retained frequently used data in the regional computers so that it did not have to be fetched from New York, an adumbration of today’s content delivery networks (CDNs).4
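The regional caching described above can be sketched as a cache sitting in front of the central data center. The Python below is illustrative only: the text does not say what replacement policy Prodigy used, so this sketch swaps in a least-recently-used (LRU) policy, and the class name, capacity, and page names are hypothetical. Each regional computer answers repeat requests locally and forwards only misses to the central site.

```python
from collections import OrderedDict
from typing import Callable, List


class RegionalCache:
    """A regional computer that answers repeat requests locally and
    forwards only cache misses to the central data center (LRU eviction)."""

    def __init__(self, fetch_central: Callable[[str], str], capacity: int) -> None:
        self.fetch_central = fetch_central  # hypothetical link to the central site
        self.capacity = capacity            # how many pages the regional node can hold
        self.pages = OrderedDict()          # page id -> content, least recent first
        self.hits = 0
        self.misses = 0

    def get(self, page_id: str) -> str:
        if page_id in self.pages:
            self.pages.move_to_end(page_id)  # mark as most recently used
            self.hits += 1
            return self.pages[page_id]
        self.misses += 1
        content = self.fetch_central(page_id)  # the long trip to the central data center
        self.pages[page_id] = content
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)  # evict the least recently used page
        return content


central_requests: List[str] = []


def fetch_from_central(page_id: str) -> str:
    central_requests.append(page_id)
    return f"<contents of {page_id}>"


node = RegionalCache(fetch_from_central, capacity=2)
for page in ["weather", "news", "weather", "weather", "stocks", "weather"]:
    node.get(page)
print(f"hits={node.hits} misses={node.misses} central={central_requests}")
```

With room for only two pages, the frequently requested weather page stays cached while the less popular news page is evicted, so the central site sees three requests instead of six.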

Distinguished by its ease of use, vibrant graphics, and a monthly pricing structure with no hourly usage fees, Prodigy quickly gained ground on its main competitors, CompuServe and GEnie (and soon America Online). The flat-rate business model, however, depended heavily on fees collected from online shopping and advertisers. Prodigy’s leaders seem somehow to have overlooked that interpersonal communication, which consumed hours of computer and network time but produced no revenue, was consistently the most popular use for online services. Just as it had conformed to the technological structure of its predecessors, Prodigy was forced to follow their billing model as well, switching to hourly billing in the early 1990s.

Prodigy represented simultaneously the acme and the demise of videotex technology in the United States, having derived from videotex but abandoned the idea of the television or some dedicated consumer-friendly terminal as the delivery channel. Instead it used the same microcomputer-centric approach of its successful contemporaries. When TICCIT and Viewdata were conceived, a computer was an expensive piece of machinery that individuals could scarcely aspire to own. Nearly everyone working on digital services at that time assumed they would have to be delivered from central, time-shared computers to inexpensive, “dumb” terminals, with the home television as the most obvious display device. But by the mid-1980s, the market penetration of microcomputers in the U.S. was such that a new world was coming into view. It became possible to imagine – indeed hard to deny – that nearly everyone would soon own a computer, and it would serve as their on-ramp to the “information superhighway.”

Even as videotex manqué, Prodigy was the last of its kind in the U.S. By the time it launched, all the other major videotex experiments in the country had shut down. There was another videotex system, however, which I have not yet mentioned, the most widely used of them all – France’s Minitel. Its story, and the distinctive philosophy of its launch and operation, require their own chapter in this story to elucidate. As if transforming the “boob tube” into a useful tool for self-improvement and communication were not an ambitious enough goal, Minitel sought to bend the trajectory of an entire nation, from relative decline upwards to technological supremacy.

Further Reading

Brian Dear, The Friendly Orange Glow (2017)

Jennifer Light, From Warfare to Welfare (2005)

MITRE Corporation, MITRE: The First Twenty Years (1979)

1. Ralph Lee Smith, Wired Nation, 5.

2. The Wikipedia article claims that Fedida was inspired by Taylor and Licklider’s 1968 article, “The Computer as a Communication Device.” An intriguing connection, if true, but one I haven’t been able to corroborate.

3. Sears’ mail-order catalog infrastructure might have given it a leg up in the coming battle over the e-commerce market. Instead, it shut down its catalog in 1993 and divested its ownership in Prodigy in 1996.

4. Good explanations of the architecture of the Prodigy system are provided by John Markoff, “Betting on a Different Videotex Idea,” The New York Times, July 12, 1989 and Benj Edwards, “Where Online Services Go When They Die,” July 12, 2014.
