We have now recounted, in succession, each of the first three attempts to build a digital, electronic computer: the Atanasoff-Berry Computer (ABC) conceived by John Atanasoff, the British Colossus project headed by Tommy Flowers, and the ENIAC built at the University of Pennsylvania’s Moore School. All three projects were effectively independent creations. Though John Mauchly, the motive force behind ENIAC, knew of Atanasoff’s work, the design of the ENIAC owed nothing to the ABC. If there was any single seminal electronic computing device, it was the humble Wynn-Williams counter, the first device to use vacuum tubes for digital storage, which helped set Atanasoff, Flowers, and Mauchly alike onto the path to electronic computing.

Only one of these three machines, however, played a role in what was to come next. The ABC never did useful work, and was largely forgotten by the few who ever knew of it. The two war machines both proved themselves able to outperform any other computer in raw speed, but the Colossus remained a secret even after the defeat of Germany and Japan. Only ENIAC became public knowledge, and so became the standard-bearer for electronic computing as a whole. Now anyone who wished to build a computing engine from vacuum tubes could point to the Moore School’s triumph to justify themselves. The ingrained skepticism from the engineering establishment that had greeted all such projects prior to 1945 now vanished; the skeptics either changed their tune or held their tongue.

The EDVAC Report

A document issued in 1945, based on lessons learned from the ENIAC project, set the tone for the direction of computing in the post-war world. Called “First Draft of a Report on the EDVAC,”1 it provided the template for the architecture of the first computers that were programmable in the modern sense: that is to say, they executed a list of commands drawn from a high-speed memory. Although the exact provenance of its ideas was, and shall remain, disputed, it appeared under the name of the mathematician John (János) von Neumann. As befitted the mind of a mathematician, it also presented the first attempt to abstract the design of a computer from the specifications for a particular machine; it attempted to distill an essential structure from its various possible accidental forms.

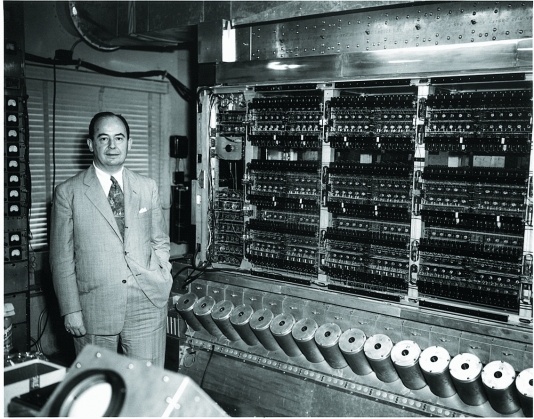

Von Neumann, born in Hungary, came to ENIAC by way of Princeton, New Jersey, and Los Alamos, New Mexico. In 1929, as an accomplished young mathematician with notable contributions to set theory, quantum mechanics, and the theory of games, he left Europe to take a position at Princeton University. Four years later, the nearby Institute for Advanced Study (IAS) offered him a lifetime faculty post. With Nazism on the rise, von Neumann happily accepted the chance to remain indefinitely on the far side of the Atlantic, becoming, ex post facto, among the first Jewish intellectual refugees from Hitler’s Europe. After the war, he lamented that “I feel the opposite of a nostalgia for Europe, because every corner I knew reminds me of… a world which is gone, and the ruins of which are no solace,” remembering his “total disillusionment in human decency between 1933 and September 1938.”2

Alienated from the lost cosmopolitan Europe of his youth, von Neumann threw his intellect behind the military might of his adoptive home. For the next five years he criss-crossed the country incessantly to provide advice and consultation on a wide variety of weapons projects, while somehow also managing to co-author a seminal book on game theory. The most secret and momentous of his consulting positions was for the Manhattan Project, the effort to build an atomic weapon, whose research team resided at Los Alamos, New Mexico. Robert Oppenheimer recruited him in the summer of 1943 to help the project with mathematical modeling, and his calculations convinced the rest of the group to push forward with an implosion bomb, which would achieve a sustained chain reaction by using explosives to drive the fissile material inward, increasing its density. This, in turn, implied massive amounts of calculation to work out how to achieve a perfectly spherical implosion with the correct amount of pressure: any error would cause the chain reaction to falter and the bomb to fizzle.

Los Alamos had a group of twenty human computers with desk calculators, but they could not keep up with the computational load. The scientists provided them with IBM punched-card equipment, but still they could not keep up. They demanded still better equipment from IBM, and got it in 1944, yet still they could not keep up.

By this time, von Neumann had added yet another set of stops to his constant circuit of the country: scouring every possible site for computing equipment that might be of use to Los Alamos. He wrote to Warren Weaver, head of Applied Mathematics for the National Defense Research Committee (NDRC), and received several good leads. He went to Harvard to see the Mark I, but found it already fully booked with Navy work. He spoke to George Stibitz and looked into ordering a Bell relay computer for Los Alamos, but gave up after learning how long it would take to deliver it. He visited a group at Columbia University that had linked multiple IBM machines into a larger automated system, under the direction of Wallace Eckert (no relation to Presper), yet this seemed to offer no major improvement on the IBM setup that Los Alamos already had available.

Weaver had, however, omitted one project from the list he gave to von Neumann: ENIAC. He certainly knew of it: in his capacity as director of the Applied Mathematics Panel, it was his business to monitor the progress of all computing projects in the country. Weaver and the NDRC had doubts about the feasibility and timeline for ENIAC, yet it is rather shocking that he did not even mention its existence.

Whatever the reason for the omission, because of it von Neumann learned about ENIAC only through a chance encounter on a train platform. The story comes from Herman Goldstine, the liaison from the Aberdeen Proving Ground to the Moore School, where ENIAC was under construction. Goldstine bumped into von Neumann at the Aberdeen railway station in June 1944; von Neumann was leaving another of his consulting gigs, as a member of the Scientific Advisory Committee to Aberdeen’s Ballistic Research Laboratory (BRL). Goldstine knew the great man by reputation, and struck up a conversation. Eager to impress, he couldn’t help mentioning the exciting new project he had underway up in Philadelphia. Von Neumann’s attitude transformed instantly from congenial colleague to steely-eyed examiner, as he grilled Goldstine on the details of his computer. He had found an intriguing new source of potential computer power for Los Alamos.

Von Neumann first visited Presper Eckert, John Mauchly and the rest of the ENIAC team in September 1944. He immediately became enamored of the project, and added yet another consulting gig to his very full plate. Both parties had much to gain. It is easy to see how the promise of electronic computing speeds would have captivated von Neumann. ENIAC, or a machine like it, might burst all the computational limits that fettered the progress of the Manhattan Project, and so many other projects or potential projects.3 For the Moore School team, the blessing of the renowned von Neumann meant an end to all their credibility problems. Moreover, given his keen mind and extensive cross-country research, he could match anyone in the breadth and depth of his insight into automatic computing.

It was thus that von Neumann became involved in Eckert and Mauchly’s plan to build a successor to ENIAC. Along with Herman Goldstine and another ENIAC mathematician, Arthur Burks, they began to sketch the parameters for a second generation electronic computer, and it was the ideas of this group that von Neumann summarized in the “First Draft” report. The new machine would be more powerful, more streamlined in design, and above all would solve the biggest hindrance to the use of ENIAC – the many hours required to configure it for a new problem, during which that supremely powerful, extraordinarily expensive computing machine sat idle and impotent. The designers of recent electro-mechanical machines such as the Harvard Mark I and Bell relay computers had avoided this fate for their machines by providing the computer with instructions via punched holes in a loop of paper tape, which an operator could prepare while the computer solved some other problem. But taking input in this way would waste the speed advantage of electronics: no paper tape feed could provide instructions as fast as ENIAC’s tubes could consume them.4

The solution outlined in the “First Draft” was to move the storage of instructions from the “outside recording medium of the device” into its “memory,” the first time this word had appeared in relation to computer storage.5 This idea was later dubbed the “stored-program” concept. But it immediately led to another difficulty, the same one that had stymied Atanasoff in designing the ABC: vacuum tubes are expensive. The “First Draft” estimated that a computer capable of supporting a wide variety of computational tasks would need roughly 250,000 binary digits of memory for instructions and short-term data storage. A vacuum-tube memory of that size would cost millions of dollars, and would be terribly unreliable to boot.

The resolution to the dilemma came from Eckert, who had worked on radar research in the early 1940s, as part of a contract between the Moore School and the “Rad Lab” at MIT, the primary center of radar research in the U.S. Specifically, Eckert worked on a radar system known as the Moving Target Indicator (MTI), which addressed the problem of “ground clutter”: all the noise on the radar display from buildings, hills, and other stationary objects that made it hard for the operator to discern the important information – the size, location, and velocity of moving formations of aircraft.

The MTI solved the clutter problem using an instrument called an acoustic delay line. It transformed the electrical radar pulse into a sound wave, and then sent that wave through a tube of mercury6, such that the sound arrived at the other end and was transformed back into an electrical pulse just as the radar was sweeping the same point in the sky. Any signal arriving from the radar at the same time as from the mercury line was presumed to be a stationary object, and cancelled.
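
For readers who think more readily in code than in mercury, the cancellation trick can be sketched in a few lines of Python. This is a deliberately simplified, digital illustration under stated assumptions: each radar sweep is treated as a list of echo amplitudes, one per range bin, and the delay line is modeled simply as handing back the previous sweep at the moment the next one arrives. The real MTI subtracted analog video signals, and the function name `mti_cancel` is a hypothetical one chosen for this sketch.

```python
def mti_cancel(sweeps):
    """Simplified two-sweep canceller (an illustration, not the wartime circuit).

    sweeps: a list of radar sweeps, each a list of echo amplitudes by range bin.
    Returns one residual sweep for each pair of consecutive sweeps.
    """
    residuals = []
    for previous, current in zip(sweeps, sweeps[1:]):
        # Assumption: the delay line returns the previous sweep exactly as the
        # current one arrives, so echoes at the same range in both (hills,
        # buildings) subtract to zero and only changes survive.
        residuals.append([c - p for c, p in zip(current, previous)])
    return residuals


sweeps = [
    [0, 5, 0, 2, 0],  # first sweep: a hill in bin 1, an aircraft in bin 3
    [0, 5, 0, 0, 2],  # second sweep: the hill unchanged, the aircraft now in bin 4
]
print(mti_cancel(sweeps))  # [[0, 0, 0, -2, 2]]
```

The hill cancels to nothing, while the aircraft survives as a pair of blips: a negative one where it was, and a positive one where it now is.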

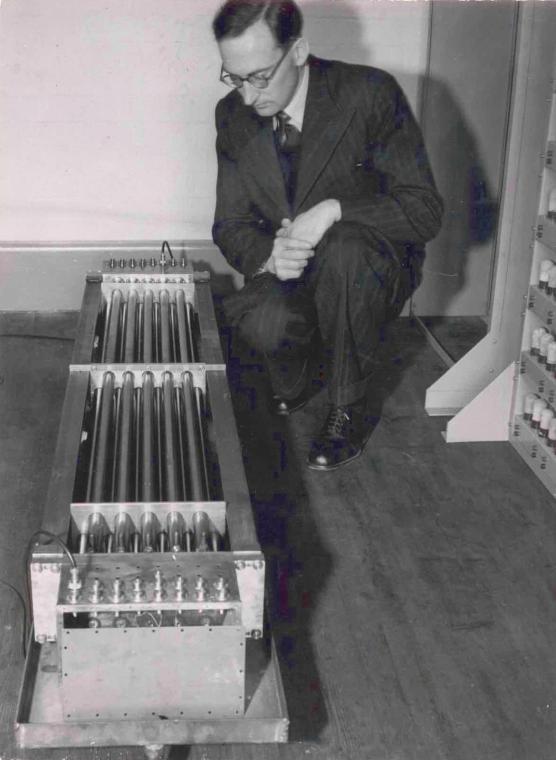

Eckert realized that the pulses of sound in the delay line could be treated as binary digits, with a sound representing 1, and its absence a 0. A single tube of mercury could hold hundreds of such digits, each passing through the line several times per millisecond, meaning that the computer need only wait a couple hundred microseconds to access a particular digit. It could access a sequential series of digits in the same tube much faster still, with each digit spaced only a handful of microseconds apart.
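
The arithmetic of delay-line access can likewise be sketched as a toy model. The numbers are assumptions for illustration, not the specifications of any real machine: a line holding 384 bits, with pulses spaced one microsecond apart. One full circulation then takes 384 microseconds, or about 2.6 passes per millisecond, so a read at a random address waits about 192 microseconds on average, while the next address in sequence arrives just one pulse later. The class `DelayLine` and its methods are hypothetical names for this sketch.

```python
from collections import deque


class DelayLine:
    """Toy recirculating delay line; sizes and timings are hypothetical."""

    def __init__(self, n_bits, slot_us=1.0):
        self.n_bits = n_bits
        self.bits = deque([0] * n_bits)  # bits[0] is the pulse emerging at the far end
        self.slot_us = slot_us           # assumed spacing between pulses, in microseconds
        self.position = 0                # address of the bit currently at the output

    def tick(self):
        # The emerging pulse is re-amplified and fed back in at the input,
        # so the whole train of bits advances by one slot.
        self.bits.rotate(-1)
        self.position = (self.position + 1) % self.n_bits

    def wait_for(self, address):
        """Let the line circulate until `address` emerges; return the wait in microseconds."""
        steps = (address - self.position) % self.n_bits
        for _ in range(steps):
            self.tick()
        return steps * self.slot_us

    def read(self, address):
        wait = self.wait_for(address)
        return self.bits[0], wait

    def write(self, address, bit):
        wait = self.wait_for(address)
        self.bits[0] = bit  # replace the pulse as it passes the output
        return wait


line = DelayLine(n_bits=384, slot_us=1.0)
print(line.write(100, 1))  # 100.0: wait for slot 100 to come round
print(line.read(100))      # (1, 0.0): it is already at the output
print(line.read(101))      # (0, 1.0): the next slot is one pulse behind
print(line.read(100))      # (1, 383.0): going back costs a full lap, less one slot
```

The contrast between the last two reads is the whole story of delay-line memory: sequential access is cheap, while jumping backward means waiting for nearly the entire contents of the tube to pass by.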

With the basic problems of how the machine would be structured resolved, von Neumann collected the group’s ideas in the 101-page “First Draft” report in the spring of 1945, and circulated it among the key stakeholders in the second-generation EDVAC project. Before long, though, it found its way into other hands. The mathematician Leslie Comrie, for instance, took a copy back to Britain after his visit to the Moore School in 1946, and shared it with colleagues. The spread of the report fostered resentment on the part of Eckert and Mauchly for two reasons: first, the bulk of the credit for the design flowed to the sole author on the draft, von Neumann.7 Second, all the core ideas contained in the design were now effectively published, from the point of view of the patent office, undermining their plans to commercialize the electronic computer.

The very grounds for Eckert and Mauchly’s umbrage, in turn, raised the hackles of the mathematicians: von Neumann, Goldstine, and Burks. To them, the report was important new knowledge that ought to have been disseminated as widely as possible in the spirit of academic discourse. Moreover, the government, and thus the American taxpayer, had funded the whole endeavor in the first place. The sheer venality of Eckert and Mauchly’s schemes to profit from the war effort irked them. Von Neumann wrote, “I would never have undertaken my consulting work at the University had I realized that I was essentially giving consulting services to a commercial group.”8

The factions went their separate ways in 1946: Eckert and Mauchly set up their own computer company, on the basis of a seemingly more secure patent on the ENIAC technology. They at first called their enterprise the Electronic Control Company, but renamed it the following year to Eckert-Mauchly Computer Corporation. Von Neumann returned to the Institute for Advanced Study (IAS) to build an EDVAC-style computer there, and Goldstine and Burks joined him. To prevent a recurrence of the debacle with Eckert and Mauchly, they ensured that all the intellectual products of this new project would become public property.

An Aside on Alan Turing

Among those who got their hands on the EDVAC report through side channels was the British mathematician Alan Turing. Turing does not figure among the first to build or design an automatic computer, electronic or otherwise, and some authors have rather exaggerated his place in the history of computing machines.9 But we must credit him as among the first to imagine that a computer could do more than merely “compute” in the sense of processing large batches of numbers. His key insight was that all the kinds of information manipulated by human minds could be rendered as numbers, and so any intellectual process could be transformed into a computation.

In late 1945, Turing published his own report, citing von Neumann’s, on a “Proposed Electronic Calculator” for Britain’s National Physical Laboratory (NPL). It delved far lower than the “First Draft” into the details of how his proposed electronic computer would actually be built. The design reflected the mind of a logician. It would have no special hardware for higher-level functions which could be composed from lower-level primitives; that would be an ugly wart on the machine’s symmetry. Likewise Turing did not set aside any dedicated area of memory for the computer’s program: data and instructions could live intermingled in memory, for they were all simply numbers. An instruction only became an instruction when interpreted as such.10 Because Turing knew that numbers could represent any form of well-specified information, the list of problems he proposed for his calculator included not just the construction of artillery tables and the solution of simultaneous linear equations, but also the solving of a jigsaw puzzle or a chess endgame.
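
Turing’s point that an instruction is merely a number until the machine interprets it can be made concrete with a toy stored-program machine. Everything about this machine is hypothetical, including its order code, which is not the ACE’s: each memory word is an integer, read as `opcode * 100 + address`, with 0 = HALT, 1 = LOAD, 2 = ADD, and 3 = STORE. The sample program loads its own fourth instruction as ordinary data, adds one to it, and writes it back, so that “LOAD 10” becomes “LOAD 11” before it is ever executed.

```python
def run(memory, max_steps=1000):
    """Run a toy stored-program machine on `memory` in place and return it.

    Hypothetical order code (not the ACE's): each word is opcode * 100 + address,
    where opcode 0 = HALT, 1 = LOAD, 2 = ADD, 3 = STORE. Instructions and data
    share the same list of plain integers.
    """
    acc = 0  # single accumulator
    pc = 0   # address of the next instruction
    for _ in range(max_steps):
        word = memory[pc]                    # fetched as an ordinary number...
        opcode, address = divmod(word, 100)  # ...and only now read as an instruction
        pc += 1
        if opcode == 0:
            return memory
        elif opcode == 1:
            acc = memory[address]
        elif opcode == 2:
            acc += memory[address]
        elif opcode == 3:
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


memory = [0] * 16
memory[0:6] = [
    103,  # LOAD 3:  fetch the instruction at address 3 as data
    209,  # ADD 9:   add 1 to it
    303,  # STORE 3: write it back, turning LOAD 10 into LOAD 11
    110,  # LOAD 10: but by the time it runs, it reads LOAD 11
    312,  # STORE 12
    0,    # HALT
]
memory[9:12] = [1, 1000, 31]
run(memory)
print(memory[3], memory[12])  # 111 31
```

Cell 12 ends up holding 31, the contents of cell 11, rather than 1000, the contents of cell 10: the number the program treated as data on one step was executed as an instruction on the next. Real machines of the era differed in countless details, but this interchangeability is the property the passage describes.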

Turing’s Automatic Computing Engine (ACE) was never built as originally proposed. Slow to get moving, it had to compete with other, more vigorous, British computing projects for the best talent. The project struggled on for several years before Turing lost interest. NPL completed a smaller machine with a somewhat different design, known as the Pilot ACE, in 1950, and several other early-1950s computers drew inspiration from the ACE architecture. But it had no wider influence and faded quickly into obscurity.

None of this is to belittle Turing or his accomplishments, only to place them in the proper context. His importance to the history of computing derives not from his influence on the design of 1950s computers, but rather from the theoretical ground he prepared for the field of academic computer science, which emerged in the 1960s. His early papers in mathematical logic, which surveyed the boundaries between that which is computable and that which is not, became the fundamental texts of this new discipline.

The Slow Revolution

As news about ENIAC and the EDVAC report spread, the Moore School became a site of pilgrimage. Numerous visitors came to learn at the feet of the evident masters, especially from within the U.S. and Britain. To bring order to this stream of petitioners, the dean of the school organized an invitation-only summer school on automatic computing in 1946. The lecturers included such luminaries as Eckert, Mauchly, von Neumann, Burks, Goldstine, and Howard Aiken (designer of the Harvard Mark I electromechanical computer).

Nearly everyone now wanted to build a machine on the template of the EDVAC report.11 The wide influence of ENIAC and EDVAC in the 1940s and 50s evinced itself in the very names that teams from around the world bestowed on their new computers. Even if we set aside UNIVAC and BINAC (built by Eckert and Mauchly’s new company) and EDVAC itself (finished by the Moore School after being orphaned by its parents), we still find AVIDAC, CSIRAC, EDSAC, FLAC, ILLIAC, JOHNNIAC, ORDVAC, SEAC, SILLIAC, SWAC, and WEIZAC. Many of these machines directly copied the freely published IAS design (with minor modifications), benefiting from von Neumann’s open policy on intellectual property.

Yet the electronic revolution unfolded gradually, overturning the existing order piece by piece. Not until 1948 did a single EDVAC-style machine come to life, and that only a tiny proof-of-concept, the Manchester “baby,” designed to prove out its new Williams tube memory system.12 In 1949, four more substantial machines followed: the full-scale Manchester Mark I; the EDSAC, at Cambridge University; the CSIRAC in Sydney, Australia; and the American BINAC, though the last evidently never worked properly. A steady trickle of computers continued to appear over the next five years.13

Some writers have portrayed the ENIAC as drawing a curtain over the past and instantly ushering in an era of electronic computing. This has required painful-looking contortions in the face of the evidence. “The appearance of the all-electronic ENIAC made the Mark I obsolete almost immediately (although capable of performing successfully for fifteen years afterward),” wrote Katherine Fishman.14 Such a statement is so obviously self-contradictory that one must imagine Ms. Fishman’s left hand did not know what her right was doing. One might excuse this as the jottings of a mere journalist. Yet we can also find a pair of proper historians, again choosing the Mark I as their whipping boy, writing that “[n]ot only was the Harvard Mark I a technological dead end, it did not even do anything very useful in the fifteen years that it ran. It was used in a number of applications for the navy, and here the machine was sufficiently useful that the navy commissioned additional computing machines from Aiken’s laboratory.”15 Again the contradiction stares, nearly slaps, one in the face.

In truth, relay computers had their merits, and continued to operate alongside their electronic cousins. Indeed, several new electromechanical computers were built after World War II, even into the early 1950s, in the case of Japan. Relay machines were easier to design, build, and maintain, and did not require huge amounts of electricity and climate control (to dissipate the vast amount of heat put out by thousands of vacuum tubes). ENIAC used 150 kilowatts of electricity, 20 of them for its cooling system alone.16

The American military continued to be a major customer for computing power, and did not disdain “obsolete” electromechanical models. In the late 1940s, the Army had four relay computers and the Navy five. Aberdeen’s Ballistic Research Laboratory held the largest concentration of computing power in the world, operating ENIAC alongside Bell and IBM relay calculators and the old differential analyzer. A September 1949 report found that each had its place: ENIAC worked best on long but simple calculations; the Bell Model V calculators served best for complex calculations due to their effectively unlimited tape of instructions and their ability to handle floating point, while the IBM machines could process very large amounts of data stored in punched cards. Meanwhile certain operations such as cube roots were still easiest to solve by hand (with a combination of table look-ups and desk calculators), saving machine time.17

Rather than the birth of ENIAC in 1945, 1954 makes a better year to mark the completion of the electronic revolution in computing, the year that the IBM 650 and 704 computers appeared. Though not the first commercial electronic computers, they were the first to be produced in the hundreds,18 and they established IBM’s dominance over the computer industry, a dominance that lasted for thirty years. In Kuhnian19 terms, electronic computing was no longer the strange anomaly of 1940, existing only in the dreams of outsiders like Atanasoff and Mauchly; it had become normal science.

Leaving the Nest

By the mid-1950s, the design and construction of digital computing equipment had come unmoored from its origins in switches or amplifiers for analog systems. The computer designs of the 1930s and early 1940s drew heavily on ideas borrowed from physics and radar labs, and especially from telecommunications engineers and research departments. Now computing was becoming its own domain, and specialists in that domain developed their own ideas, vocabulary, and tools to solve their own problems.

The computer in the modern sense had emerged, and our story of the switch thus draws near its close. But the world of telecommunications had one last, supreme surprise up its sleeve. The tube had bested the relay in speed by having no moving parts. The final switch of our story did one better by having no internal parts at all. An innocuous-looking lump of matter sprouting a few wires, it came from a new branch of electronics known as “solid-state.”

For all their speed, vacuum tubes remained expensive, bulky, hot, and not terribly reliable. They could never have powered, say, a laptop. Von Neumann wrote in 1948 that “it is not likely that 10,000 (or perhaps a few times 10,000) switching organs will be exceeded as long as the present techniques and philosophy are employed.”20 The solid-state switch made it possible for computers to surpass this limit again and again, many times over; made it possible for computers to reach small businesses, schools, homes, appliances, and pockets; made possible the creation of the digital land of Faerie that now permeates our existence. To find its origins we must rewind the clock some fifty years, and go back to the exciting early days of the wireless.

Further Reading

David Anderson, “Was the Manchester Baby conceived at Bletchley Park?”, British Computer Society (June 4th, 2004)

William Aspray, John von Neumann and the Origins of Modern Computing (1990)

Martin Campbell-Kelly and William Aspray, Computer: A History of the Information Machine (1996)

Thomas Haigh et al., ENIAC in Action (2016)

John von Neumann, “First Draft of a Report on the EDVAC” (1945)

Alan Turing, “Proposed Electronic Calculator” (1945)

- As with ENIAC, the name EDVAC was essentially meaningless jargon massaged into a pronounceable name: Electronic Discrete Variable Automatic Computer. ↩

- Quoted in George Dyson, Turing’s Cathedral (2012), 53. ↩

- Of course, Say’s Law ensured that this massive surge in the supply of computing power would soon meet its match in demand, a process that continues down to this day. ↩

- What about Colossus, you might wonder? It took paper tape input and ran at electronic speeds, processing 5,000 characters per second in each of its five computing units, by reading the paper tape with photoelectric sensors, rather than pins. Why did electronic computer designers in the U.S. not use this method? Colossus was able to achieve this speed by spinning a loop of tape as fast as possible in one direction and consuming every row of data in order. Jumping to an arbitrary point on the tape would have required waiting for the loop to reach the right spot, an average delay of 0.5 seconds on a 5,000-line tape. ↩

- This was an intentional biological metaphor on von Neumann’s part, whose “First Draft” is replete with such metaphors. He was deeply interested in the structure of the human brain, and continued to show an interest in the analogies between artificial computers and the kind of processing done by neurons until his tragic death in 1957: a cancer slowly consumed each of his physical and mental faculties over the course of a year and a half, before at last claiming his life. ↩

- Delay lines could also use other liquid media, solid crystals, or even air as the medium for sound propagation. According to Burks and Burks, The First Electronic Computer: The Atanasoff Story, 285, the radar engineers borrowed the delay line idea from a physicist at Bell Labs named William Shockley, about whom we will have much more to say shortly. However, I have not been able to corroborate that story, and no mention of it is made in Broken Genius, the only book-length biography of Shockley. Wikipedia states that the delay line originated in the 1920s as a means of cancelling telephone echoes (https://en.wikipedia.org/wiki/Analog_delay_line), but its only sourcing is patent records. ↩

- The basic structure of almost all modern computers, a processor that reads both instructions and data from a single memory unit, is known to this day as the “von Neumann architecture.” Eckert argued that it should have been called the “Eckert architecture.” ↩

- Quoted in Aspray, John von Neumann and the Origins of Modern Computing, 45. ↩

- For the opposite view, that Turing’s ideas were in fact an obligatory passage point for the modern computer, see Martin Davis, The Universal Computer (2000). Davis received his PhD in mathematics at Princeton under Alonzo Church, who had been Turing’s adviser a decade before. After completing his thesis he worked on the ILLIAC at the University of Illinois, and then had a two-year stint at the Institute for Advanced Study, where he wrote programs for the IAS computer. “Martin Davis: An Interview Conducted by Andrew Goldstein,” IEEE History Center, July 18, 1991. My point of view falls more in line with Leo Corry, “Turing’s Pre-War Analog Computers,” Communications of the ACM, August 2017. ↩

- Turing’s 1936 paper “On Computable Numbers” had already explored the interconvertibility of static data and dynamic instructions. He described what was later called a “Turing machine”, and showed how such a machine could be transformed into a number and fed as input to a universal Turing machine which could interpret and execute any other Turing machine. This idea, in turn, clearly derived from Kurt Gödel’s 1931 papers on the incompleteness theorem, in which Gödel had encoded propositions of mathematical logic as numbers. ↩

- Ironically, the first electronic machine to actually run in stored-program mode was ENIAC itself, which was converted in 1948 to use instructions stored in memory. Only after this conversion (which required forever forgoing its ability to execute multiple operations in parallel) did it work successfully at its new home at the Aberdeen Proving Ground. The conversion was von Neumann’s idea, and his wife Klara and Herman Goldstine’s wife Adele did much of the design work for the coding system. A function table, originally designed to store lookup tables for complex functions (such as sine and cosine), was re-purposed to store up to 100 program instructions. One accumulator was designated to hold the address of the current instruction and the address of a possible branch instruction. Haigh et al., ENIAC in Action, 157-165. ↩

- Most of the post-EDVAC-report machines switched over from delay lines to a different form of memory, which also derived from radar technology. Instead of the delay lines used to clean radar signals, it relied on the radar screen itself. The phosphorescent charge on a lit spot on the screen would alter the conductive properties of metal laid atop it. Thus, if the radar’s cathode-ray tube (CRT) screen was covered with a metal screen, it could store a two-dimensional array of binary digits, a cell holding a charge representing a one, and a dark one a zero. This allowed much faster random access than a delay line, since there was no need to wait for the right bit to come through the tube, but presented a much more finicky engineering problem. The slightest electromagnetic interference would disrupt the CRT beam. The British engineer Frederic (F.C.) Williams, who had worked on the British end of the “moving target indicator” problem during the war, was the first to crack the puzzle, and the memory unit became known as a “Williams tube.” Later in the 1950s, yet another memory technology came to the fore, known as core memory. It consisted of wire fabrics woven with tiny magnetic rings at each junction, each of which could store a single bit. More stable and reliable than delay line or CRT memory, it remained the standard for the next two decades. Not until the 1970s did the dilemma faced by Mauchly and Atanasoff in 1940 (how to store large amounts of electronic data, given the high cost of vacuum tubes) finally become moot. For by then the switch itself had become cheap enough to take over the computer’s memory function as well as its logic. ↩

- https://en.wikipedia.org/wiki/List_of_vacuum_tube_computers ↩

- Katherine Davis Fishman, The Computer Establishment (1982), 37. ↩

- Campbell-Kelly and Aspray, Computer: A History of the Information Machine, 75. ↩

- Paul E. Ceruzzi, The Reckoners (1983), 123. ↩

- Haigh et al., ENIAC in Action, 170-71; John N. Vardalas, The Computer Revolution in Canada (2001), 25-26. ↩

- 123 704s and almost 2,000 of the simpler 650 were built before IBM discontinued their lines in the early 1960s. (https://www-03.ibm.com/ibm/history/exhibits/650/650_intro.html; http://www-03.ibm.com/ibm/history/ibm100/us/en/icons/ibm700series/). ↩

- Thomas Kuhn, The Structure of Scientific Revolutions (1962). ↩

- John von Neumann, “The General and Logical Theory of Automata” in Cerebral Mechanisms in Behavior – The Hixon Symposium (1951). The symposium took place in 1948 but the proceedings were not published until 1951. ↩
